Platform

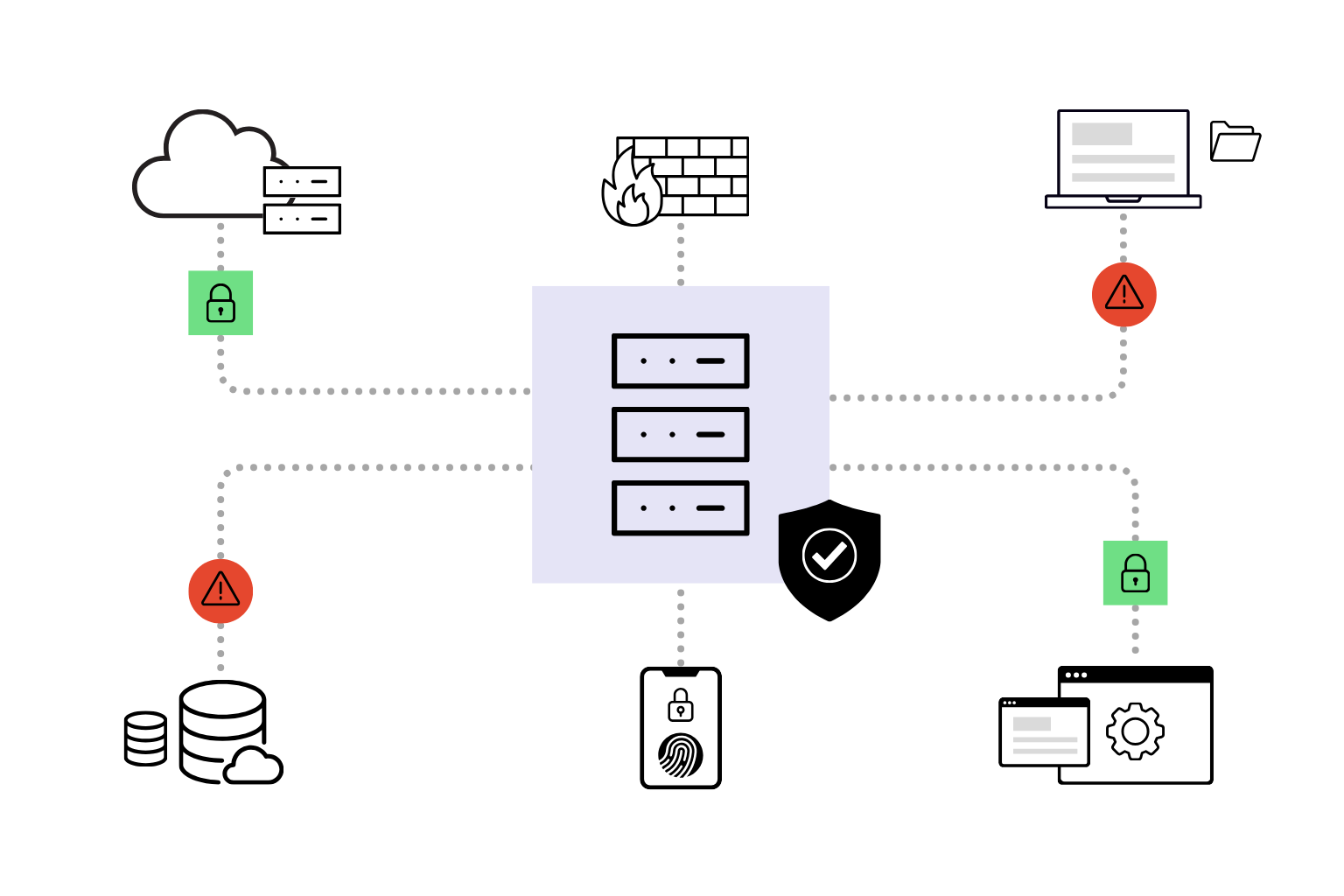

Explore Inspectiv’s AI-enabled platform that integrates Bug Bounty, Pentesting, Feature Testing, and VDP, designed to cut through noise and deliver signal-driven results.

Bug Bounty

Continuously discover high-impact vulnerabilities, without the overhead of traditional bug bounty programs.

Penetration Testing

Stay audit-ready and reduce risk with expert-led testing and flexible retesting support.

See Inspectiv in Action!

Schedule a live demo to see how our platform helps you manage vulnerabilities, reduce noise, and stay compliant.

Test how your AI actually behaves in production across prompts, APIs, memory, vector databases, agents, and MLOps pipelines.

Inspectiv's comprehensive AI and LLM security testing is human-led and follows an adversarial model, designed to secure systems against real-world attacks across the entire production (or staging/dev) environment. The testing covers a wide range of high-impact vulnerabilities, including prompt injection and jailbreaks, authorization controls, data leakage, and input/output manipulation. It also addresses specialized risks within the AI stack, such as vector databases (RAG), API and orchestrator communication, multi-agent systems, code execution environments, and the Machine Learning Operations (MLOps) supply chain.

Testing typically draws on a wide range of methods, with our researchers working comprehensively through the OWASP Machine Learning Top 10. This includes custom prompt injection and jailbreak attacks alongside testing of authorization and access controls, data leakage, input/output manipulation, and the specialized AI-stack risks listed above. Our AI and LLM testing aims to uncover vulnerabilities hidden in built-in functionality or in the architecture itself.

Inspectiv delivers AI security testing that adapts to your team’s size, maturity, and deployment schedule. Unlike traditional consultancies and off-the-shelf scanning tools, we match AI security experts to your specific model architecture, training data pipelines, and deployment environment.